GTC Nvidia’s strategy for capitalizing on generative AI hype: glue two H100 PCIe cards together, of course.

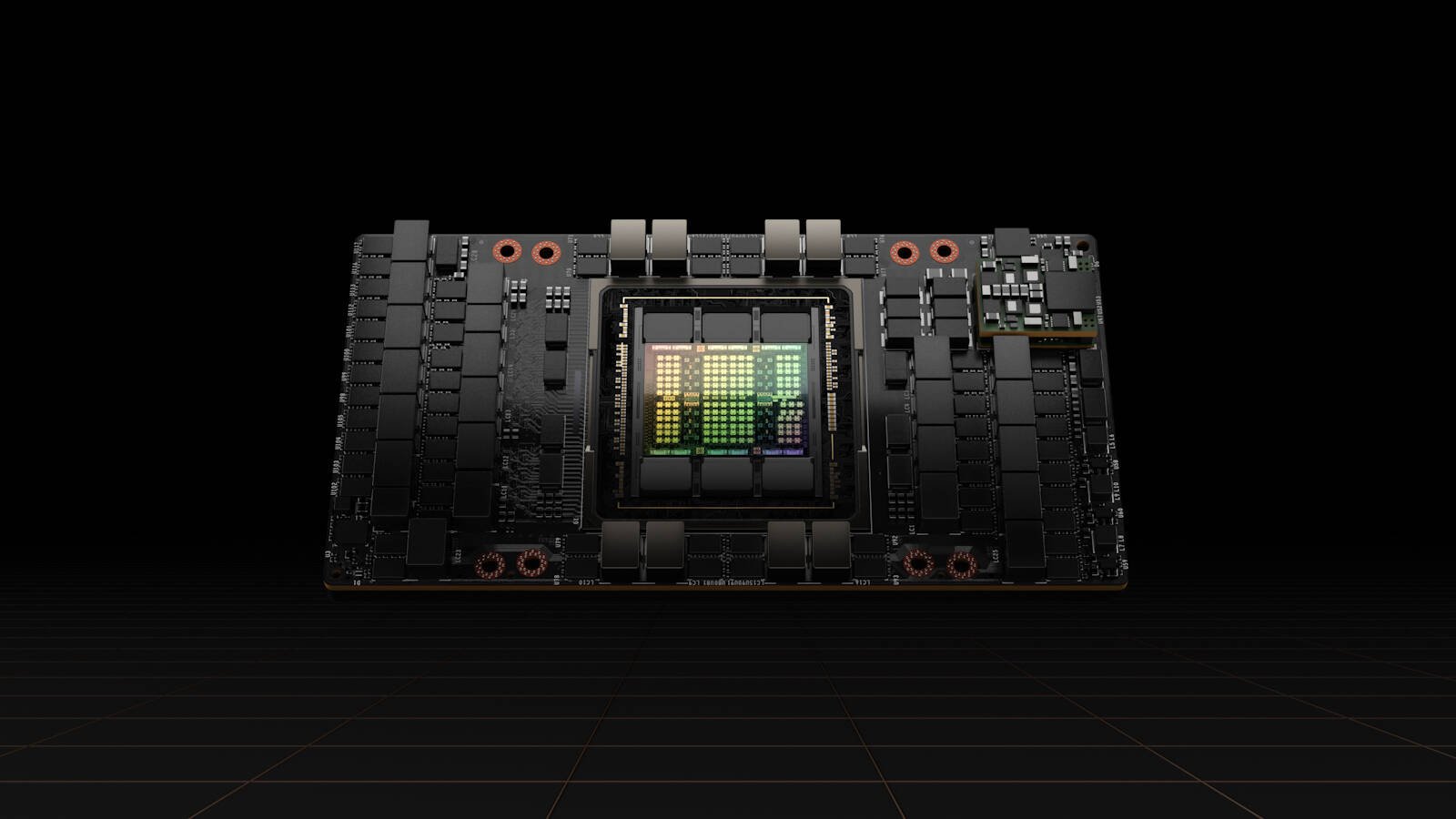

At GTC this week, Nvidia unveiled a new version of its H100 GPU, dubbed the H100 NVL, which it says is ideal for inferencing large language models like ChatGPT or GPT-4. And if it looks like two H100 PCIe cards stuck together, that’s because that’s exactly what it is. (Well, it’s got faster memory too; more on that later.)

“These GPUs work as one to deploy large language models and GPT models from anywhere from five billion parameters to 200 [billion],” Nvidia VP of Accelerated Computing Ian Buck said during a press briefing on Monday.

The form factor is a bit of an odd one for Nvidia, which has a long history of packing multiple GPU dies onto a single card. In fact, Nvidia’s Grace Hopper superchips basically do just that, but with a Grace CPU and Hopper GH100. If we had to guess, Nvidia may have run into trouble packing enough power circuits and memory onto a standard enterprise PCIe form factor.

Speaking of form factor, the frankencard is massive by any stretch of the imagination, spanning four slots and boasting a TDP to match at roughly 700W. Communication is handled by a pair of PCIe 5.0 x16 slots, because this is just two H100s glued together. The glue in this equation appears to be three NVLink bridges, which Nvidia says are good for 600GB/s of bandwidth, or a little more than 4.5x the bandwidth of its dual PCIe interfaces.
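For the curious, the 4.5x figure can be roughly reproduced with back-of-the-envelope math. The sketch below assumes the nominal PCIe 5.0 numbers (32 GT/s per lane, 128b/130b encoding) counted per direction; those figures and every variable name are our assumptions, not something Nvidia supplied, and only the 600GB/s NVLink number comes from the briefing.

```python
# Assumed nominal PCIe 5.0 parameters (not from Nvidia's briefing).
PCIE5_GT_PER_LANE = 32        # gigatransfers per second, per lane
LANES_PER_SLOT = 16           # x16 slot
ENCODING_EFFICIENCY = 128 / 130  # 128b/130b line encoding

# Figure quoted by Nvidia for the three NVLink bridges.
NVLINK_GBPS = 600


def pcie5_x16_gbps(apply_encoding: bool) -> float:
    """Per-direction bandwidth of one PCIe 5.0 x16 slot in GB/s."""
    raw = PCIE5_GT_PER_LANE * LANES_PER_SLOT / 8  # bits -> bytes
    return raw * ENCODING_EFFICIENCY if apply_encoding else raw


# Two slots, one per H100 board.
dual_nominal = 2 * pcie5_x16_gbps(apply_encoding=False)
dual_encoded = 2 * pcie5_x16_gbps(apply_encoding=True)

ratio_nominal = NVLINK_GBPS / dual_nominal
ratio_encoded = NVLINK_GBPS / dual_encoded

print(f"Dual PCIe 5.0 x16 (nominal): {dual_nominal:.0f} GB/s")
print(f"NVLink vs dual PCIe (nominal): {ratio_nominal:.2f}x")
print(f"NVLink vs dual PCIe (after encoding): {ratio_encoded:.2f}x")
```

Either way you count it, the ratio lands a little above 4.5x, which squares with the claim. Counting PCIe bandwidth in both directions would halve it, so treat this as a sanity check rather than a benchmark.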

While you might expect performance on par with a pair of H100s, Nvidia claims the card is actually capable of 2.4x-2.6x the performance, at least in FP8 and FP16 workloads.

That performance can likely be attributed to Nvidia’s decision to use faster HBM3 memory instead of HBM2e. We’ll note that Nvidia is already using HBM3 on its larger SXM5 GPUs. And the memory doesn’t just have higher bandwidth (4x compared to a single 80GB H100 PCIe card); there’s also more of it: 94GB per die.

The cards themselves are aimed at large-language model inferencing. “Training is the first step — teaching a neural network model to perform a task, answer a question, or generate a picture. Inference is the deployment of those models in production,” Buck said.

While Nvidia already has its larger SXM5 H100s in the wild for AI training, those are only available from OEMs in sets of four or eight. And at 700W apiece, those systems are not only hot, but potentially challenging for existing datacenters to accommodate. For reference, most colocation racks come in at between 6kW and 10kW.

By comparison, the H100 NVL, at 700W, should be a bit easier to accommodate. By our estimate, a single-socket, dual H100 NVL system (four GH100 dies) would be somewhere in the neighborhood of 2.5kW.
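To put those power numbers side by side, here is a minimal sketch of the rack math. It uses only the figures quoted above (700W per GPU or card, our 2.5kW system estimate, and the 6kW to 10kW rack range); the function and variable names are ours, and the "implied overhead" is simply what is left of our estimate once the two cards are subtracted, not a measured value.

```python
# Figures taken from the article; names are illustrative.
SXM5_TDP_W = 700                 # per SXM5 H100
NVL_CARD_TDP_W = 700             # per H100 NVL card (two GH100 dies)
NVL_SYSTEM_KW = 2.5              # our estimate for a dual-NVL server
RACK_BUDGETS_KW = (6.0, 10.0)    # typical colocation rack range


def gpu_power_kw(count: int, tdp_w: int) -> float:
    """Total GPU power draw in kW, ignoring CPUs, memory, and fans."""
    return count * tdp_w / 1000


def systems_per_rack(rack_kw: float, system_kw: float) -> int:
    """Whole systems that fit within a rack's power budget."""
    return int(rack_kw // system_kw)


# GPU-only draw for the SXM5 configurations OEMs sell.
sxm_gpu_only_kw = {n: gpu_power_kw(n, SXM5_TDP_W) for n in (4, 8)}

# GPU-only draw for two NVL cards, and what remains of the 2.5kW estimate.
nvl_gpu_only_kw = gpu_power_kw(2, NVL_CARD_TDP_W)
implied_overhead_kw = NVL_SYSTEM_KW - nvl_gpu_only_kw

# How many dual-NVL systems fit at each end of the rack range.
nvl_fit = {rack: systems_per_rack(rack, NVL_SYSTEM_KW) for rack in RACK_BUDGETS_KW}

print(f"SXM5 GPU-only draw: {sxm_gpu_only_kw}")
print(f"Dual NVL GPU-only draw: {nvl_gpu_only_kw:.1f} kW")
print(f"Implied non-GPU overhead: {implied_overhead_kw:.1f} kW")
print(f"Dual-NVL systems per rack: {nvl_fit}")
```

The takeaway: eight SXM5 GPUs draw 5.6kW before you count anything else in the chassis, which nearly fills a 6kW rack on its own, while two to four of the dual-NVL boxes fit across the same range.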

However, anyone interested in picking one of these up is going to have to wait. While Nvidia may have taken the easy route and glued two cards together, the company says its NVL cards won’t be ready until sometime in the second half of the year.

What if you don’t need a fire-breathing GPU?

If you’re in the market for something a little more efficient, Nvidia also released the successor to the venerable T4. The Ada Lovelace-based L4 is a low-profile, single-slot GPU with a TDP of 72W, nearly one-tenth that of the H100 NVL.

Nvidia’s L4 is a low-profile, single-slot card that sips just 72W

This means the card, like its predecessors, can be powered entirely off the PCIe bus. Unlike the NVL cards, which are designed for inferencing on large models, Nvidia is positioning the L4 as its “universal GPU.” In other words, it’s just another GPU, but smaller and cheaper so it can be crammed into more systems — up to eight to be exact. According to the L4 datasheet, each card is equipped with 24GB of vRAM and up to 32 teraflops of FP32 performance.

“It’s for efficient AI, video, and graphics,” Buck said, adding that the card is specifically optimized for AI video workloads and features new encoder/decoder accelerators.

“An L4 server can decode 1040 video streams coming in from different mobile users,” he said, leaving out exactly how many GPUs this server needs to do that or at what resolution those streams are.

This functionality lines up with existing 4-series cards from Nvidia, which have traditionally been used for video decoding, encoding, transcoding, and video streaming.

But just like its larger siblings, the L40 and H100, the card can also be used for AI inferencing on a variety of smaller models. To this end, one of the L4’s first customers will be Google Cloud for its Vertex AI platform and G2-series VMs.

The L4 is in private preview on GCP and can be purchased from Nvidia’s broader partner network.