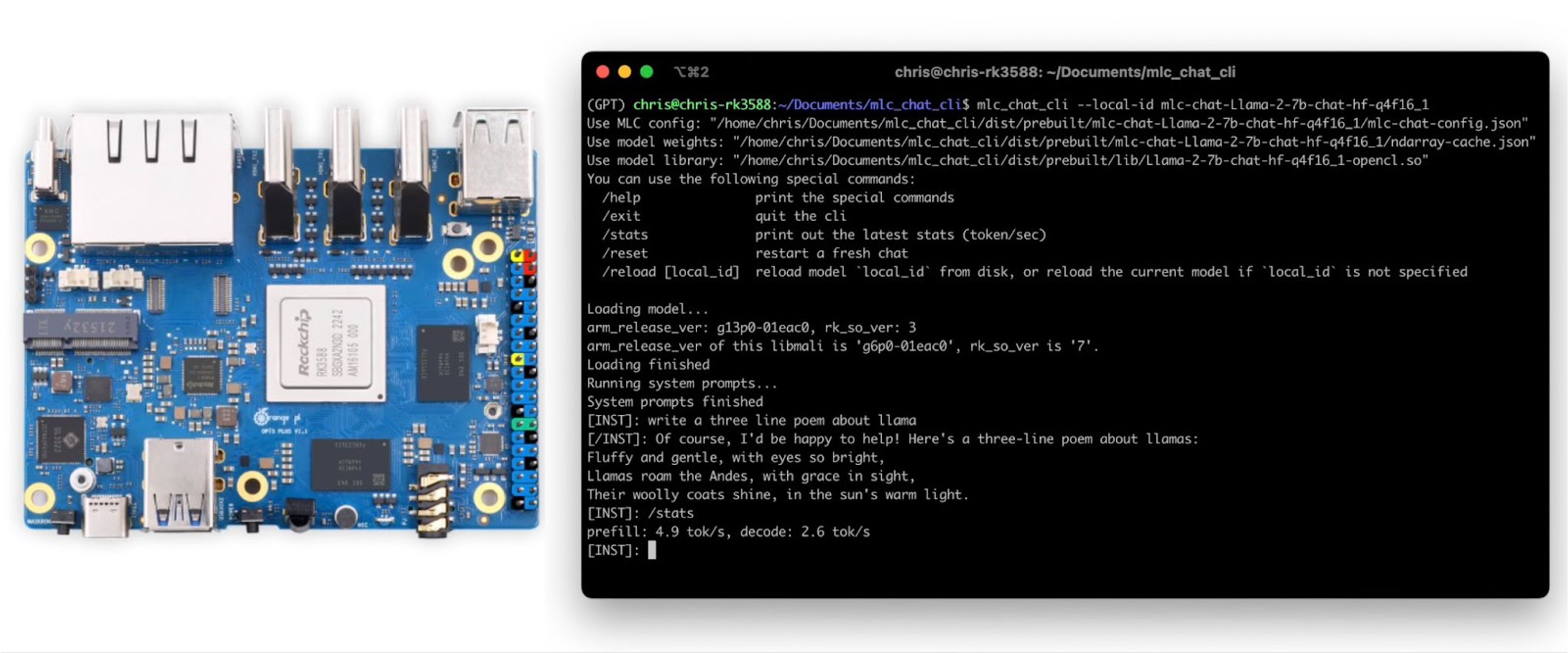

GPU-Accelerated LLM on a $100 Orange Pi

TL;DR

This post shows GPU-accelerated LLMs running at a usable speed on an embedded device. More specifically, on a $100 Orange Pi 5 with a Mali GPU, we achieve 2.5 tok/sec for Llama2-7b and 5 tok/sec for RedPajama-3b through Machine Learning Compilation (MLC) techniques. Additionally, we are able to run a Llama-2 13b model at 1.5 tok/sec on a 16GB version of the Orange Pi 5+ that costs under $150.

Background

Progress in open language models has been catalyzing innovation across question-answering, translation, and creative tasks....