An AWS engineer recently wrote about what Amazon's deployment pipelines look like and which practices the company follows to deploy continuously to production. A pipeline validates changes in multiple pre-production environments by running unit and integration tests, and it uses stages to stagger deployments to production. Developers don't actively watch deployments because the pipeline monitors key metrics and can roll back if needed. Developers also model their pipelines as code, so pipelines can inherit common configurations.

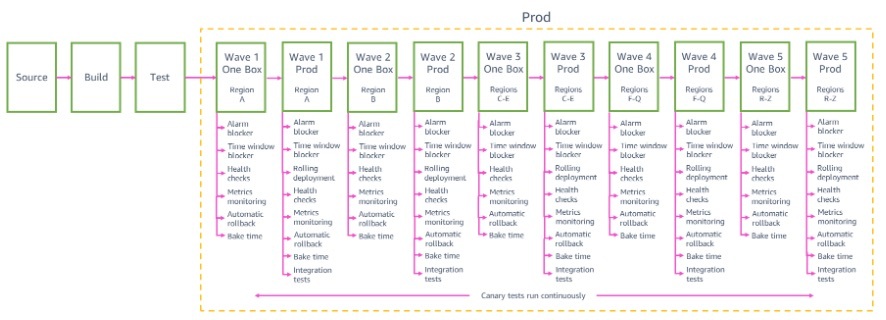

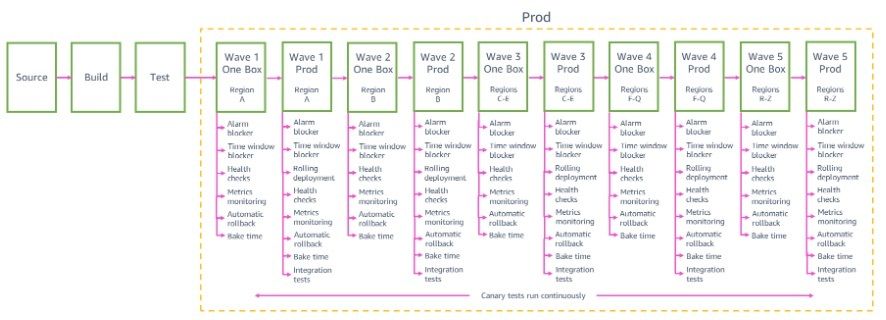

Typically, a microservice has multiple deployment pipelines, which decouples different kinds of changes and reduces deployment time. For instance, there could be one pipeline each for the application code, the infrastructure, OS patches, configuration, and operator tooling. Each pipeline has at least four phases: source, build, test, and prod.

Source: “Automating safe, hands-off deployments” blog post
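To make "pipelines as code" more concrete, here is a minimal Python sketch of how a team might declare its five pipelines so that each one inherits a shared default configuration and overrides only what differs. Everything in it is an assumption for illustration: `PipelineConfig`, `derive`, the `bake_minutes` default, and the wave names are hypothetical and are not Amazon's internal pipeline tooling.

```python
from dataclasses import dataclass, replace

# The four phases every pipeline has, per the article.
PHASES = ("source", "build", "test", "prod")


@dataclass(frozen=True)
class PipelineConfig:
    """Hypothetical pipeline definition; not Amazon's internal tooling."""
    name: str
    phases: tuple = PHASES
    bake_minutes: int = 60  # hypothetical default monitoring period per prod wave
    prod_waves: tuple = ("single-az", "single-region", "remaining-regions")  # hypothetical


# Shared defaults that every service pipeline inherits.
COMMON = PipelineConfig(name="common")


def derive(name: str, **overrides) -> PipelineConfig:
    """Create a pipeline that inherits every COMMON setting unless overridden."""
    return replace(COMMON, name=name, **overrides)


pipelines = [
    derive("application-code"),
    derive("infrastructure"),
    derive("os-patches", bake_minutes=120),  # example override
    derive("configuration"),
    derive("operator-tooling"),
]

for p in pipelines:
    print(p.name, p.phases, p.bake_minutes)
```

Using `dataclasses.replace` on a frozen base object keeps the shared defaults in one place: a change to `COMMON` reaches every pipeline that does not override that field.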

In the build phase, the pipeline compiles the code, runs unit tests and static analysis, assesses code coverage, and stores the build artifacts. In the test phase, the pipeline validates changes in multiple pre-production environments, such as alpha, beta, and gamma; this is also where teams practice deploying to different Availability Zones and Regions. Finally, in the prod phase, the pipeline is split into smaller deployment stages to limit the impact of a failure. Before moving on to the next stage, the pipeline monitors the service for a period of time to confirm that it remains available.

Source: “Automating safe, hands-off deployments” blog post
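The staggered prod rollout described above can be sketched as a loop over waves: deploy to one wave, watch its metrics for a bake period, and promote only if no alarm fires. This minimal Python sketch simulates bake time as a fixed number of metric checks rather than a real wait. `deploy`, `rollback`, and `alarm_fired` are hypothetical callbacks standing in for real deployment tooling and metric alarms, and the wave names are illustrative.

```python
from typing import Callable, List


def promote_through_waves(
    waves: List[str],
    deploy: Callable[[str], None],
    rollback: Callable[[str], None],
    alarm_fired: Callable[[str], bool],
    bake_checks: int = 3,
) -> List[str]:
    """Deploy wave by wave and return the waves that finished baking cleanly.

    If an alarm fires during a wave's bake period, that wave is rolled back
    and promotion stops, so later waves never receive the change.
    """
    completed: List[str] = []
    for wave in waves:
        deploy(wave)
        # Simulated bake time: poll the metrics a fixed number of times
        # (a real pipeline would wait between checks).
        for _ in range(bake_checks):
            if alarm_fired(wave):
                rollback(wave)
                return completed
        completed.append(wave)
    return completed


log: List[str] = []
done = promote_through_waves(
    ["az-1", "region-1", "region-2"],  # hypothetical wave names
    deploy=lambda w: log.append(f"deploy {w}"),
    rollback=lambda w: log.append(f"rollback {w}"),
    alarm_fired=lambda w: w == "region-2",
)
print(done)  # ['az-1', 'region-1']
print(log)   # ['deploy az-1', 'deploy region-1', 'deploy region-2', 'rollback region-2']
```

Stopping at the first failing wave is what limits the blast radius: a bad change reaches at most one wave before it is rolled back.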

When developers finish coding and have validated their changes in a personal AWS account using unit and integration tests, someone else on the team has to review the change. The reviewer makes sure that the code is correct, that there are enough tests, that it is sufficiently instrumented, and that the changes are backward compatible so they can be safely rolled back. Code review is the last manual step, and a critical one, because once the code lands in the main branch, the deployment pipeline triggers automatically. However, additional approvals are sometimes needed before going live, for example for a change to the user interface. Clare Liguori, principal software engineer at Amazon Web Services, shared more details about the review process:

We do trunk-based development, so the pipeline only deals with the main branch. We rarely create branches. An auto-backup tool pushes your local commits to a remote git ref, and code review submission creates a remote git ref of your proposed changes. Code reviews run unit tests.

Some deployment pipelines also have time windows that define when a deployment can start, which reduces the risk of adverse impact. According to Liguori, these time windows help teams have “less engagement with on-call engineers outside of the regular working hours, and make sure that a small number of changes are bundled together while the time windows are closed.”
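A deployment time window boils down to a check on the current time plus a queue of changes that waits while the window is closed, so that the queued changes go out together once it opens. The sketch below assumes hypothetical weekday windows from 09:00 to 16:00 local time; the window values and the function names are illustrative, not taken from Amazon's pipelines.

```python
from datetime import datetime, time
from typing import Dict, List, Tuple

# Hypothetical windows: weekday (Monday=0 ... Sunday=6) -> list of (start, end).
# Weekends have no window, so nothing starts on Saturday or Sunday.
WINDOWS: Dict[int, List[Tuple[time, time]]] = {
    day: [(time(9, 0), time(16, 0))] for day in range(5)
}


def can_start_deployment(now: datetime, windows=WINDOWS) -> bool:
    """True if `now` falls inside an open window (start inclusive, end exclusive)."""
    return any(
        start <= now.time() < end
        for start, end in windows.get(now.weekday(), [])
    )


def release(pending: List[str], now: datetime) -> List[str]:
    """Return the batch to deploy now; while the window is closed, changes stay queued."""
    if not can_start_deployment(now):
        return []
    batch = list(pending)
    pending.clear()
    return batch


pending = ["change-a", "change-b"]
print(release(pending, datetime(2024, 1, 6, 22, 0)))  # [] (Saturday night, window closed)
print(release(pending, datetime(2024, 1, 8, 9, 30)))  # ['change-a', 'change-b'] (Monday morning)
```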

InfoQ recently talked to Clare Liguori, principal software engineer for AWS container services, to learn more about how Amazon does continuous deployments.

InfoQ: What happens when there are ongoing issues in production?

Clare Liguori: For an ongoing issue in production, we focus first on rolling back the specific pipeline stages that show impact (like the affected Availability Zone or Region) to mitigate the impact as quickly as possible. If we find that the impact’s root cause is not specific to a particular pipeline stage, then we will roll back all waves in the pipeline that have the change deployed.
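Liguori's answer describes a two-step response, which this hypothetical Python sketch encodes: first roll back only the waves that show impact, then, if the root cause turns out not to be stage-specific, roll back the remaining waves that have the change deployed. The function name and wave names are assumptions for illustration, and `None` stands for "root cause not yet known".

```python
from typing import List, Optional, Set


def rollback_plan(
    deployed_waves: List[str],
    impacted: Set[str],
    root_cause_stage_specific: Optional[bool] = None,
) -> List[List[str]]:
    """Return rollback steps in order, each step a list of waves to roll back.

    deployed_waves: waves (in pipeline order) that already have the change.
    impacted: waves whose metrics show impact.
    root_cause_stage_specific: None while unknown, True or False once diagnosed.
    """
    # Step 1: mitigate quickly by rolling back only the impacted waves.
    steps = [[w for w in deployed_waves if w in impacted]]
    # Step 2: if the cause is not tied to one stage, also roll back every
    # other wave that has the change deployed.
    if root_cause_stage_specific is False:
        remaining = [w for w in deployed_waves if w not in impacted]
        if remaining:
            steps.append(remaining)
    return steps


waves = ["az-1", "region-1", "region-2"]  # hypothetical waves with the change
print(rollback_plan(waves, {"region-1"}))  # [['region-1']]
print(rollback_plan(waves, {"region-1"}, root_cause_stage_specific=False))
# [['region-1'], ['az-1', 'region-2']]
```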

InfoQ: How do teams decide which type of tests to run at every stage?

Liguori: The pipeline always runs the full set of tests regardless of the size of the change. Even the smallest change can cause bugs or performance regressions in unexpected places, so the pipeline makes sure that all the unit tests, functional tests, and integration tests run on every change. For large test suites that could take a long time to run, we can speed up the pipeline and get changes to production faster by breaking up the test suite into multiple, smaller test suites that run in parallel in the pipeline.
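One way to split a long test suite into parallel shards is a greedy longest-first heuristic: sort tests by their historical duration and always give the next test to the shard with the least total time so far. This is a technique chosen for illustration; the interview does not say how Amazon partitions its suites, and the test names and durations below are hypothetical.

```python
import heapq
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, List


def shard_by_duration(durations: Dict[str, float], n_shards: int) -> List[List[str]]:
    """Greedy longest-first: give each test to the shard with the least total time."""
    heap = [(0.0, i) for i in range(n_shards)]  # (total seconds, shard index)
    shards: List[List[str]] = [[] for _ in range(n_shards)]
    for name in sorted(durations, key=durations.get, reverse=True):
        total, i = heapq.heappop(heap)
        shards[i].append(name)
        heapq.heappush(heap, (total + durations[name], i))
    return shards


def run_shards(shards: List[List[str]], tests: Dict[str, Callable[[], bool]]) -> bool:
    """Run shards in parallel; the change passes only if every test passes.

    Within a shard, `all` stops at the first failing test.
    """
    def run_one(shard: List[str]) -> bool:
        return all(tests[name]() for name in shard)

    with ThreadPoolExecutor(max_workers=len(shards)) as pool:
        return all(pool.map(run_one, shards))


durations = {"unit": 30, "functional": 120, "integration": 300, "perf": 200}
shards = shard_by_duration(durations, n_shards=2)
print(shards)  # [['integration', 'unit'], ['perf', 'functional']]
```

With these durations, the two shards take about 330 and 320 seconds instead of 650 seconds run sequentially, while every test still runs on every change.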

InfoQ: How does a team deal with bottlenecks in code reviews?

Liguori: All changes going to production must be code reviewed, which is enforced by the pipeline. To avoid code reviews becoming a bottleneck, we prefer breaking up feature work into multiple small code reviews that can be merged and shipped to production individually, rather than submitting a single large code review that contains many code changes. Small code reviews are more comfortable for team members to review between tasks, so this practice lets us review and ship code reviews more continuously.

InfoQ: To what extent do developers run their own copy of the production environment in their AWS accounts?

Liguori: The development setup for testing local changes depends on the team’s needs, preferences, and architecture. For example, many microservices can be tested by running the service locally in a container and using a single “development” database in an AWS account shared by the team to store test data. Other teams prefer for each team member to have their own small development database in a separate AWS account. Depending on the architecture and the change that needs to be tested, other resources like a Step Functions workflow or an SQS queue might be created in a development account as well.